Boto is the Amazon Web Services (AWS) SDK for Python, which allows Python developers to write software that makes use of Amazon services like S3 and EC2. Boto provides an easy-to-use, object-oriented API as well as low-level direct service access.

We will be using the following Python modules.

- boto3

- datetime

#!/usr/bin/env python3

import datetime

import boto3

# Calculate the time limit for EBS snapshots (7 days ago, in UTC,
# because the StartTime values returned by the API are in UTC)
time_limit = datetime.datetime.now(datetime.timezone.utc) - datetime.timedelta(days=7)

# Create the EC2 client (region and credentials come from your AWS configuration)
ec2client = boto3.client('ec2')


# Retrieve the EC2 instances from the client's configured region
def get_ec2_instances():
    instance_list = ec2client.describe_instances()
    # Return the instance list
    return instance_list


# Retrieve the list of volumes attached to the given instance ID
def get_volumes_by_instance_id(instance_id):
    volume_filters = [{"Name": "attachment.instance-id", "Values": [instance_id]}]
    volume_list = ec2client.describe_volumes(Filters=volume_filters)
    return volume_list


# Retrieve the snapshots taken from the given volume ID
def get_ebs_snapshots_by_volume_id(volume_id):
    snapshot_filters = [{"Name": "volume-id", "Values": [volume_id]}]
    snapshot_list = ec2client.describe_snapshots(Filters=snapshot_filters)
    return snapshot_list


instance_list_info = get_ec2_instances()

# Walk instances -> volumes -> snapshots and print the recent snapshots
for ec2_reservation in instance_list_info["Reservations"]:
    for ec2_instance in ec2_reservation["Instances"]:
        volume_list_info = get_volumes_by_instance_id(ec2_instance["InstanceId"])
        for volume_info in volume_list_info["Volumes"]:
            snapshot_list_info = get_ebs_snapshots_by_volume_id(volume_info["VolumeId"])
            for snapshot_info in snapshot_list_info["Snapshots"]:
                # Keep only snapshots started within the last 7 days
                if snapshot_info['StartTime'].date() >= time_limit.date():
                    print("Instance ID :", ec2_instance["InstanceId"])
                    print("Volume ID :", volume_info["VolumeId"])
                    print(snapshot_info['SnapshotId'], snapshot_info['StartTime'].date())

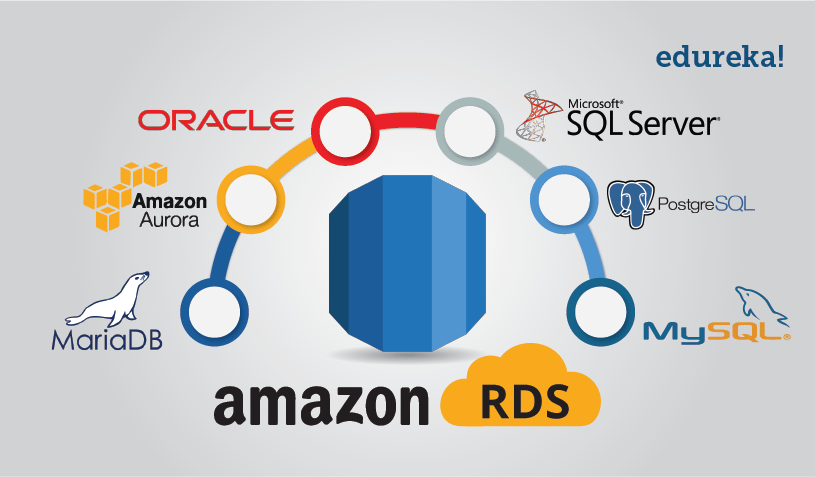

In this Amazon RDS tutorial, we will discuss Amazon’s Relational Database Service (RDS) in detail and also do a hands-on, but first let us understand why it came into existence.

Back in the old days, you had to set up a back-end server and research and install various software to support your application; only after all of these tiring tasks could you start developing your application.

Well, amazing is, sorry, Amazon is here. Amazon Web Services (AWS) offers a service called Amazon RDS (Relational Database Service), which does all these tasks (i.e. setup, operation, updates) for you automatically.

You just have to select the database that you want to launch, and with just one click you have a back end server at your service which will be managed automatically!

Let’s take an example here. Suppose you start a small company.

You want to launch an application backed by a MySQL database, and since there is a lot of database work, there is a chance that the development work will fall behind. With RDS handling the database setup and maintenance, your team can spend that time on the application instead.

Moving along in this Amazon RDS tutorial, let’s discuss the components of RDS.

Amazon RDS Components:

- DB Instances

- Regions and Availability Zones

- Security Groups

- DB Parameter Groups

- DB Option Groups

Let’s discuss each one of them in detail.

DB Instances

DB Instances are the building blocks of RDS. A DB Instance is an isolated database environment in the cloud, which can contain multiple user-created databases and can be accessed using the same tools and applications that one uses with a stand-alone database instance.

A DB Instance can be created using the AWS Management Console, the Amazon RDS API, or the AWS Command Line Interface.

The computation and memory capacity of a DB Instance depends on the DB Instance class. For each DB Instance you can select from 5 GB to 6 TB of associated storage capacity.

The DB Instances are of the following types:

- Standard Instances (m4,m3)

- Memory Optimised (r3)

- Micro Instances (t2)
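As a rough illustration of creating a DB Instance through the Amazon RDS API, the sketch below builds the request for boto3's `create_db_instance` call. The identifier, credentials, the `db.t2.micro` class and the 20 GB storage size are placeholder assumptions, the helper names are hypothetical, and actually sending the request needs boto3 plus valid AWS credentials.

```python
def build_db_instance_params(identifier, username, password,
                             instance_class="db.t2.micro", storage_gb=20,
                             multi_az=False):
    """Build keyword arguments for the RDS CreateDBInstance call."""
    return {
        "DBInstanceIdentifier": identifier,
        "Engine": "mysql",
        "DBInstanceClass": instance_class,   # sets compute and memory capacity
        "AllocatedStorage": storage_gb,      # storage size in GB
        "MasterUsername": username,
        "MasterUserPassword": password,
        "MultiAZ": multi_az,                 # True requests a standby in another AZ
    }


def create_db_instance(params):
    """Send the request to RDS; needs boto3 and valid AWS credentials."""
    import boto3  # imported lazily so the builder above works without boto3

    rds = boto3.client("rds")
    return rds.create_db_instance(**params)


# Placeholder values, for illustration only
params = build_db_instance_params("example-db", "admin", "change-me-please")
print(params["DBInstanceClass"], params["AllocatedStorage"])
```

Keeping the parameter builder separate from the API call lets you review exactly what will be requested before anything is launched.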

Regions and Availability Zones

The AWS resources are housed in highly available data centers, which are located in different areas of the world. This “area” is called a region.

Each region has multiple Availability Zones (AZs). They are distinct locations that are engineered to be isolated from failures in other AZs.

You can deploy your DB Instance in multiple AZs. This provides failover: in case one AZ goes down, there is a second to switch over to. The failover instance is called the standby, and the original instance is called the primary instance.

Security Groups

A security group controls the access to a DB Instance. It does so by specifying a range of IP addresses or the EC2 instances that you want to give access.

Amazon RDS uses 3 types of Security Groups:

- VPC Security Group

- It controls access to a DB Instance that is inside a VPC.

- EC2 Security Group

- It controls access to an EC2 Instance and can be used with a DB Instance.

- DB Security Group

- It controls access to a DB Instance that is not in a VPC.

DB Parameter groups

- It contains the engine configuration values that can be applied to one or more DB Instances of the same instance type.

- If you don’t apply a DB Parameter group to your instance, you are assigned a default Parameter group which has the default values.

DB Option groups

- Some DB engines offer tools that simplify managing your databases.

- RDS makes these tools available with the use of Option groups.

INTRODUCTION TO NAT

Welcome to the Introduction to NAT guide! This overview will give you a general understanding of Network Address Translation, or NAT, which is essential to the IPv4 networks we still use today. I mention IPv4 specifically because IPv6 renders NAT essentially purposeless as it provides enough IP addresses for everyone to have their own! But more on this later, let’s get started!

WHY NAT?

NAT stands for “Network Address Translation” and is not the same as a “gnat,” which our readers from the Southern United States identify as a small winged insect that can wreak havoc on your picnic! Although NAT can be as irritating as a gnat when configuring technology such as video conferencing, it is not spelled the same way. The primary reason we have NAT is a lack of IPv4 address space to handle the massive number of devices we use every day.

An IPv4 address is 32 bits in size, so the IPv4 address space contains 2^32 = 4,294,967,296 addresses. That sounds like a lot, but when you consider every server, computer, phone, smartwatch, electronic door lock, smart light bulb, etc., the number starts to sound a little smaller. If every one of these devices required a public IP address, we would have been out of IP addresses long ago! In fact, there are 2 BILLION (2,000,000,000) smartphones in existence alone! (http://thehub.smsglobal.com/smartphone-ownership-usage-and-penetration). So, how can an organization with thousands of servers give each one access to the internet without assigning public IP addresses? The answer is NAT!
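The size of the IPv4 address space follows directly from the 32-bit address length. A quick check with Python's standard `ipaddress` module, treating the whole space as the network 0.0.0.0/0:

```python
import ipaddress

# The whole IPv4 address space is the network 0.0.0.0/0
ipv4_space = ipaddress.ip_network("0.0.0.0/0")
total_addresses = ipv4_space.num_addresses

# 32 bits give 2**32 possible addresses
print(total_addresses == 2 ** 32)
print(f"{total_addresses:,}")
```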

HOW NAT WORKS

To understand how NAT works, we need to understand a few pieces of information:

PUBLIC VS. PRIVATE IP ADDRESSES

Private IP Addresses

Defined by “RFC 1918” as “non-routable” to the internet. This means that if a device is assigned one of these IP addresses, information from the internet cannot reach this device without a NAT in place. These addresses are as follows:

i. Class A: 10.0.0.0 – 10.255.255.255 (16,777,216 IP addresses)

ii. Class B: 172.16.0.0 – 172.31.255.255 (1,048,576 IP addresses)

iii. Class C: 192.168.0.0 – 192.168.255.255 (65,536 IP addresses)

Although the “class system” above can assist in choosing a proper range for your network, it is by no means a rule. If you want to create a network with 2 devices, you can use a Class A address and your boss probably won’t bat an eye; just make sure it has the proper “subnet mask” so that it doesn’t allocate too many addresses. (Subnet masks are a topic for another lesson.)
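The private ranges and their sizes can be checked with Python's standard `ipaddress` module. In this sketch the CIDR prefixes (/8, /12, /16) are the usual way of writing the three ranges, the helper `is_rfc1918` is a hypothetical name, and the /30 network at the end is just one example of a mask that keeps a Class A network small.

```python
import ipaddress

# The three RFC 1918 private ranges, written in CIDR notation
PRIVATE_RANGES = {
    "Class A": ipaddress.ip_network("10.0.0.0/8"),
    "Class B": ipaddress.ip_network("172.16.0.0/12"),
    "Class C": ipaddress.ip_network("192.168.0.0/16"),
}

for name, network in PRIVATE_RANGES.items():
    # network[0] and network[-1] are the first and last address of the range
    print(name, network[0], "-", network[-1], f"({network.num_addresses:,} addresses)")


def is_rfc1918(address):
    """Return True if the address falls inside one of the three ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in PRIVATE_RANGES.values())


# A small network carved out of the Class A range: a /30 mask leaves 4 addresses
small = ipaddress.ip_network("10.0.0.0/30")
print(small.netmask, small.num_addresses)
```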

Public IP addresses

Public addresses are addresses that fall into any range not within the private IP guidelines. These are assigned by your ISP, who may have purchased their IP address blocks from one of the Regional Internet Registries. These registries are nearly out of IPv4 addresses, however, so many companies that need new IPv4 address blocks must purchase them from companies willing to sell ones they have already purchased.

PORTS AND SOCKETS

A “port” is a number between 0 and 65535. The first 1024 (0 through 1023) are “well-known” port numbers that represent frequently used services, such as SSH (22) and HTTP (80).

A “socket” is the IP address and the port together. For example, 192.168.1.1:22 is the SSH socket for the computer with IP address 192.168.1.1.

You may be asking, “What do these things have to do with NAT?” Well I’ll tell you! NAT has 3 primary methods of operation:

1. Static: Static NAT is 1 private IP = 1 public IP. If your company has purchased 5 public IPs and they have 5 servers with private IPs, they will “map” each private IP to a public IP. Some organizations will map the public IP directly to the server, but NATting allows for the ability to change servers more easily and seamlessly without having the downtime required to remove the IP from one server and add it to another.

2. Dynamic: Dynamic NAT uses a pool of public IP addresses and maps them to private addresses on demand, typically first come, first served. If you have 5 servers with private IP addresses and 5 public IP addresses, the NAT gateway will assign public addresses to private addresses as the servers need them. So if a server with 192.168.1.1 tries to reach the internet, it will receive a public IP address when it makes the attempt. When the server is finished communicating, it will return the IP to the pool for another server to use.

3. Port Address Translation (Overloading): Port Address Translation is where the ports and sockets come into play. In “PAT,” each device with a private address connects through its own port on a shared public address. So, one server may have its private IP address 192.168.1.1 mapped to socket 62.24.54.235:62838 and another with private IP address 192.168.1.2 mapped to 62.24.54.235:34839. Both have the same public IP address, but are using different ports. For incoming traffic, “Port Forwarding” is used. So, the NAT gateway (typically your router) will see an incoming request for a service, such as SSH, and forward the connection to the appropriate private address and port. If you have a server at 192.168.1.1 and you want to access it via SSH, you can forward port 22 to 192.168.1.1 and all requests to port 22 on your public IP address will be forwarded to that server.
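To make the PAT bookkeeping concrete, here is a toy translation table in Python. It is only a sketch of the idea, not how any real router or NAT gateway is implemented: the class name `PatGateway`, its methods, and the starting port 49152 are all assumptions chosen for illustration.

```python
import itertools


class PatGateway:
    """Toy model of Port Address Translation (not a real NAT implementation)."""

    def __init__(self, public_ip, first_port=49152):
        self.public_ip = public_ip
        self._next_port = itertools.count(first_port)
        self.outbound = {}   # (private_ip, private_port) -> public_port
        self.inbound = {}    # public_port -> (private_ip, private_port)

    def translate_outbound(self, private_ip, private_port):
        """Map a private socket to a socket on the shared public IP."""
        key = (private_ip, private_port)
        if key not in self.outbound:
            public_port = next(self._next_port)
            self.outbound[key] = public_port
            self.inbound[public_port] = key
        return (self.public_ip, self.outbound[key])

    def forward_port(self, public_port, private_ip, private_port):
        """Add a static port forwarding rule for incoming connections."""
        self.inbound[public_port] = (private_ip, private_port)

    def translate_inbound(self, public_port):
        """Return the private socket for an incoming port, or None if unmapped."""
        return self.inbound.get(public_port)


gateway = PatGateway("62.24.54.235")
# Two private servers share one public IP but get different public ports
print(gateway.translate_outbound("192.168.1.1", 51000))
print(gateway.translate_outbound("192.168.1.2", 51000))
# Forward incoming SSH (port 22) to the server at 192.168.1.1
gateway.forward_port(22, "192.168.1.1", 22)
print(gateway.translate_inbound(22))
print(gateway.translate_inbound(8080))
```

An incoming port with no mapping returns `None`, which mirrors why unsolicited traffic to unforwarded ports never reaches a private device.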

NAT EXAMPLES

Static NAT: An example of static NAT would be if someone named Tony Stark had a public phone number (Public IP address) and a private extension (Private IP address). If Steve Rogers called Tony at that public phone number, he would reach Tony without ever knowing Tony’s private extension. If Tony were to quit or get fired, another person could move into Tony’s desk and the public number could be pointed at their private extension. If Steve called that number back, he would be connected to Tony’s replacement.

Dynamic NAT: An example of Dynamic NAT would be if Tony and 4 other board members all had private phone numbers (Private IPs). Stark Industries has 5 public phone numbers (Public IPs). When any board member makes an outbound call, they are routed to whichever public line is open at the time. So the caller ID on the receiver’s end could show any one of the 5 public phone numbers (Public IP addresses) depending on which one was given to the caller.

Port Address Translation: Let’s say you need to “forward” a port to allow you to access a server from the outside world on port 22. In a static or dynamic NAT environment, this is not necessary; you just map the private IP address to the public IP address. But what if you only have one address? This is where port forwarding comes into play. Let’s use a phone operator example.

Let’s say Steve Rogers is trying to call Tony Stark. Steve Rogers only knows Tony Stark’s executive admin (Pepper Potts’) number, but does not have Tony’s private line. Pepper Potts’ number is the public IP address. Steve calls Pepper Potts who then connects Steve to Tony Stark. The caveat here is that the operator never gives Steve Tony’s phone number. In fact, Tony doesn’t have a public phone number and can ONLY be called by Pepper. This is the important aspect of NAT; it can provide an extra layer of security by only allowing necessary ports to be accessed without allowing anyone to connect to any port.

AWS NAT LAB!

We will run through an example in this guide, but check out the Linux Academy Lab for creating a NAT instance and NAT Gateway in AWS and try it for yourself.

We are going to create an AWS NAT gateway in just a few steps! This will allow your private EC2 instances to make outbound connections to the internet for things such as downloading updates, while connections initiated from the internet cannot reach them through the gateway. I am going to skip straight to the NAT configuration section. If you are unfamiliar with EC2 setup, please check out our AWS courses to get up to speed! There are also handy NAT tutorials within those as well!

1. First, we are going to access our VPC Dashboard and select “NAT Gateways”:

2. Next, we will select “Create NAT Gateway”

3. Now we are going to select a *PUBLIC* subnet. This is the subnet the NAT Gateway lives in, and it gives the gateway its path to the internet. When the NAT Gateway receives traffic from your private instances, it forwards that traffic to the internet and sends the responses back to the instances accordingly. We then need an Elastic IP for the NAT Gateway. If you haven’t already created one, click “Create New EIP” to have one created for you. Finally, we click “Create a NAT Gateway.”

4. After we create our NAT Gateway, we need to edit the route tables to allow traffic to access the gateway.

5. Once you are on the Route Tables screen, select the default route table created in your VPC. If you have created a custom one, use that. Whichever table you edit, it must be the one associated with the private subnet whose instances should use the NAT Gateway. In this example, I have created a new route table called “test public.” Edit this route table and select “Add another route.” Enter “0.0.0.0/0” as the Destination, your NAT Gateway ID as the Target (yours will have a different ID than mine, but will begin with “nat-”) and click “Save.”

6. Now, we create a security group to ensure traffic is allowed to our NAT. Note that a security group can be attached to a NAT instance but not to a NAT Gateway; if you are using a NAT Gateway, control traffic with the security groups on your private instances and with network ACLs instead. Select “Security Groups” on the left side, select “Create Security Group,” give it a descriptive Name tag such as “NAT_SG,” a Group name of the same, a Description, and select the proper VPC. Then, select “Yes, Create.”

7. Finally, we add the appropriate “Inbound Rules.” Outbound rules are set to “Allow All” by default. If you wish to change this, you can do so by utilizing the same techniques as the inbound rules. Select your “NAT_SG” security group, click on the “Inbound Rules” tab, and click “Add another rule.” In this example, we are going to allow HTTP traffic to this instance. We select “HTTP (80),” which automatically selects the TCP (6) protocol and a “Port Range” of 80. After this, we select the Source, which identifies the private instances that require access. In this case, the source is the security group “sg-1eb4f463.” You can also use the CIDR range of the private subnet as the source, if you wish. This will allow the private instances to access the NAT, which has a route to the outside world, allowing your instances to access the internet!
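The console steps for creating the NAT Gateway and its route can also be scripted with boto3. The following is a hedged sketch rather than a definitive implementation: the helper names and the resource IDs are hypothetical, it assumes the route table you pass in is the one used by your private subnet, and the function that talks to AWS needs boto3 and valid credentials.

```python
def nat_gateway_request(public_subnet_id, allocation_id):
    """Keyword arguments for the EC2 CreateNatGateway call."""
    return {"SubnetId": public_subnet_id, "AllocationId": allocation_id}


def default_route_request(route_table_id, nat_gateway_id):
    """Keyword arguments for EC2 CreateRoute: send 0.0.0.0/0 to the NAT gateway."""
    return {
        "RouteTableId": route_table_id,
        "DestinationCidrBlock": "0.0.0.0/0",
        "NatGatewayId": nat_gateway_id,
    }


def create_nat_gateway(public_subnet_id, private_route_table_id):
    """Run the console steps through the API; needs boto3 and AWS credentials."""
    import boto3  # imported lazily so the helpers above work without boto3

    ec2 = boto3.client("ec2")
    # Allocate an Elastic IP for the gateway
    allocation_id = ec2.allocate_address(Domain="vpc")["AllocationId"]
    response = ec2.create_nat_gateway(
        **nat_gateway_request(public_subnet_id, allocation_id)
    )
    nat_gateway_id = response["NatGateway"]["NatGatewayId"]
    # Wait until the gateway is available before routing traffic to it
    ec2.get_waiter("nat_gateway_available").wait(NatGatewayIds=[nat_gateway_id])
    ec2.create_route(**default_route_request(private_route_table_id, nat_gateway_id))
    return nat_gateway_id


# Hypothetical IDs, for illustration only
print(default_route_request("rtb-0123456789abcdef0", "nat-0123456789abcdef0"))
```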

Great! You have created your first NAT! Now that you have created it, the instances in your private subnets can reach the internet to update themselves and download packages over HTTP!

In this post, we will go through the basic terminology used in Puppet, which will help us understand Puppet.

- PUPPET MASTER

This is our Puppet server, which takes care of the Puppet clients that connect to it. It serves the required data to clients and receives reports from them once our configurations have been applied. By default, it acts as the centralized machine for all your Puppet activity.

- MASTERLESS PUPPET

The Puppet configuration management tool can be deployed in two ways: a client-server model, or an independent-node (masterless) setup. Depending on your requirements, you can use either model. Many people ask how a masterless Puppet setup is possible. The answer is the “puppet apply /path/to/my/site.pp” command: if the Puppet manifests are available locally, puppet apply compiles them into a catalog and applies it without a master. We will see more about this command in the future.

- PUPPETMASTERD

The running Puppet server process is called puppetmasterd.

- PUPPET AGENT

The running Puppet client software. It is responsible for:

1) Gathering facts: collecting system details such as OS type, version, RAM details, CPU details and much more using the installed facter tool.

2) Sending those details to the master in order to get the required compiled configuration details (catalogs).

3) Getting the compiled configuration details from the Puppet master.

4) Applying those compiled configurations at a designated time.

5) Collecting reports on the applied configuration and sending them to the master.

- PUPPET NODE

Puppet clients are called nodes.

- RESOURCES

Resources are the individual pieces of configuration that Puppet manages on a node, such as files, packages, services and users.

- FACTER

A reporting tool that runs on nodes and reports details such as the OS, hardware and software types of the node where it is installed to the Puppet master.

- CLIPPED OUTPUT OF FACTER COMMAND:

architecture => amd64

blockdevices => sda,sdb

boardmanufacturer => Hewlett-Packard

fqdn => linuxnix

gid => root

hardwareisa => x86_64

hostname => linuxnix

id => root

interfaces => eth0,lo,virbr0,virbr0_nic,wlan0

ipaddress => 192.168.122.1

kernel => Linux

kernelmajversion => 4.4

kernelrelease => 4.4.0-31-generic

kernelversion => 4.4.0

memoryfree => 2.41 GB

memorysize => 7.58 GB

memorysize_mb => 7757.53
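Because facter prints one `name => value` pair per line, its output is easy to load into a dictionary when you want to script against it. A small Python sketch follows; the function name `parse_facter_output` is hypothetical, and the sample text is a few lines copied from the output above.

```python
def parse_facter_output(text):
    """Parse `name => value` lines into a dict, skipping lines without `=>`."""
    facts = {}
    for line in text.splitlines():
        if "=>" not in line:
            continue
        name, _, value = line.partition("=>")
        facts[name.strip()] = value.strip()
    return facts


sample = """
architecture => amd64
hostname => linuxnix
kernel => Linux
memorysize_mb => 7757.53
"""

facts = parse_facter_output(sample)
print(facts["hostname"], facts["kernel"])
# Values are strings, so convert before comparing numbers
print(float(facts["memorysize_mb"]) > 4096)
```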

- ATTRIBUTE

Attributes are used to specify the state desired for a given configuration resource. We will see more about this in our coming posts.

- PUPPET MANIFESTS

A file containing code written in the Puppet DSL and named with the .pp file extension. Manifests are stored in /etc/puppet/manifests or /etc/puppet/modules/.

- CATALOGS

Compiled form of manifests.

- PUPPET MODULES

Modules are a larger unit than manifests: a module bundles related manifests together with the files and templates they use. Every manifest in a module should define a single class or defined type.

- FILES

Physical files you can serve out to your agents through puppet. These are stored in /etc/puppet/files or /etc/puppet/modules/

- TEMPLATES

Template files contain text with embedded code and variables, whose values are filled in depending on node details.

- CLASSES

Collections of resources

- DEFINITIONS

Composite collections of resources

Puppet is a Configuration Management tool that is used for deploying, configuring and managing servers. It performs the following functions:

- Defining distinct configurations for each and every host, and continuously checking and confirming whether the required configuration is in place and is not altered (if altered Puppet will revert back to the required configuration) on the host.

- Dynamic scaling-up and scaling-down of machines.

- Providing control over all your configured machines, so a centralized (master-server or repo-based) change gets propagated to all, automatically.
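The "check and revert" behaviour described in the first point can be pictured as comparing a desired state with the actual state and changing only what differs. The Python sketch below illustrates that idea only; it is not how Puppet itself is implemented, and the function names and the NTP settings are hypothetical.

```python
def plan_changes(desired, actual):
    """Return the settings that must change to bring `actual` to `desired`."""
    return {
        key: value
        for key, value in desired.items()
        if actual.get(key) != value
    }


def converge(desired, actual):
    """Apply only the needed changes; running it again changes nothing."""
    changes = plan_changes(desired, actual)
    actual.update(changes)
    return changes


# Hypothetical desired state and a host that has drifted from it
desired = {"ntp_package": "installed", "ntp_service": "running"}
host = {"ntp_package": "installed", "ntp_service": "stopped"}

# The first run fixes the drift, the second run finds nothing to do
print(converge(desired, host))
print(converge(desired, host))
```

The second call returning an empty dict is the property that makes repeated runs safe: a host already in the required state is left alone.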

Puppet uses a master-agent architecture in which the master and the agents communicate through a secure encrypted channel with the help of SSL.

Configuration Management

System Administrators usually perform repetitive tasks such as installing servers, configuring those servers, etc. They can automate this task, by writing scripts, but it is a very hectic job when you are working on a large infrastructure.

To solve this problem, Configuration Management was introduced. Configuration Management is the practice of handling changes systematically so that a system maintains its integrity over time. Configuration Management (CM) ensures that the current design and build state of the system is known, good & trusted; and doesn’t rely on the tacit knowledge of the development team. It allows access to an accurate historical record of system state for project management and audit purposes. Configuration Management overcame the following challenges:

- Figuring out which components to change when requirements change.

- Redoing an implementation because the requirements have changed since the last implementation.

- Reverting to a previous version of the component if you have replaced it with a new but flawed version.

- Replacing the wrong component because you couldn’t accurately determine which component needed replacing.

WHY DO WE NEED CONFIGURATION MANAGEMENT TOOLS?

As system administrators, our job is a tedious one with repetitive tasks. As infrastructure grows, these tedious tasks take a big chunk of our time. This is where automation comes into the picture. Many people started using scripting languages like shell, Perl, Python, Ruby, PHP or even the newer language Go to automate repeated tasks. But infrastructure growth did not stop at a couple of hundred machines; it is growing exponentially with the advent of the cloud. This is where we require centralized configuration management tools like Puppet and Ansible. These tools allow us to configure one server or many servers remotely and with ease. They are a kind of big brother to the Fabric module in Python. Below are some advantages.

- Saves time.

- Removes repetitive tasks at a large scale.

- Can work in homogeneous environments.

- With tools like Puppet we can build an entire infrastructure without manual intervention in hours, which normally takes weeks or even months to set up with huge financial inputs.

- WHAT ARE THE DISADVANTAGES OF SCRIPTING?

- A scripting language can be used to automate simple to medium tasks, but if you want to build a complete infrastructure you have to take help from configuration management tools, which implement different modules to talk to different parts of an IT infrastructure.

- WHY DO WE REQUIRE PUPPET?

- Although CFEngine was the first configuration management tool, it did not capture the market. Puppet did, and many companies use it now. From my experience, though, Puppet is good for DevOps and Ansible is good for system administrators.

- WHO DEVELOPED PUPPET?

- Luke Kanies, a system admin who thought scripting was a limiting factor in automating system admin tasks, developed Puppet as an open source project.

- WHOM IS PUPPET MEANT FOR?

- Puppet is meant for everyone who wants to automate infrastructure tasks (DevOps) or orchestration tasks (SysOps).

- WHERE CAN WE AND CAN WE NOT USE PUPPET?

- Are Puppet and Ansible the only solution for system administration? The answer is no. There are still some tasks for which we have to depend on manual system administration or scripting.